Learning Design & EdTech Portfolio

Designing for students who disengage quietly: feedback loops, psychological safety, and practice environments that make revision feel normal.

I am an undergraduate Computer Science and Data Science student who thinks in learning cycles. Most of the work below was built around students who go quiet when they feel behind. Each project answers three questions:

- Who was I designing for?

- What changed in the learning experience?

- How would I evaluate or improve it next time?

CS Club Workshop: Imposter Syndrome and Internship Anxiety

Designing a safer space so students show up and stay.

Context & Learners

- Setting: Tech at NYU CS club, early undergraduates (mostly first- and second-year students).

- Learners: Students worried about "falling behind" in recruiting and feeling like outsiders in CS.

Problem

Attendance was shrinking. The students who showed up most were already confident. The ones who needed support the most were drifting away, often saying some version of "I am too behind to even be here."

Learning Goals

- Help students name imposter feelings instead of hiding them.

- Replace vague panic with specific next steps (applications, resumes, practice structure).

- Increase willingness to attend events and ask questions.

Design & Implementation

- Anonymous Q&A form so students could surface real fears without exposing themselves.

- Small-group discussion prompts that normalized insecurity instead of centering only "star" students.

- Closing checklist with 3–5 concrete actions (for example: apply to a set number of roles weekly, book one office hour, find one resume buddy).

Evidence / Evaluation

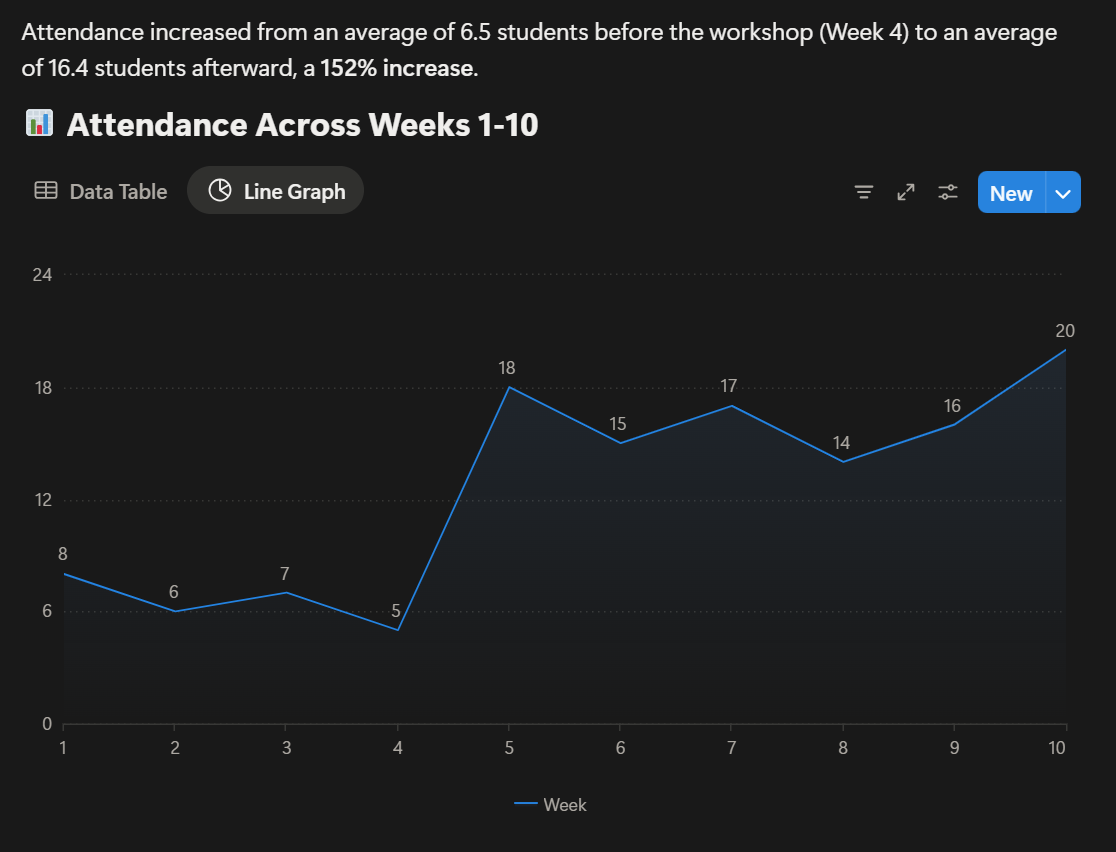

- Attendance tracking: average attendance more than doubled, from 6.5 students before the workshop to 16.4 students afterward, a 152% increase (about 2.5 times the earlier average).

- Qualitative comments from participants:

  - "It helped to see that other people were just as worried as I was."
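The attendance figures above can be checked with a short calculation. This is a minimal sketch; the function name `percent_increase` is an assumption made for illustration, and the two averages are the ones reported in the attendance tracking.

```python
def percent_increase(before: float, after: float) -> float:
    """Return the relative change from `before` to `after` as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (after - before) / before * 100.0


before_avg = 6.5   # average attendance before the workshop
after_avg = 16.4   # average attendance afterward

increase = percent_increase(before_avg, after_avg)
ratio = after_avg / before_avg
print(f"{increase:.0f}% increase, {ratio:.2f}x the earlier average")
# prints "152% increase, 2.52x the earlier average"
```

A 152% increase means attendance reached roughly two and a half times its earlier level, not just double.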

What I Would Change Next Time

- Add a short pre- and post-workshop confidence check (1–5 scale).

- Follow up with focused micro-sessions on specific topics such as cold emailing and resume review.

Realtime AI Debate Platform

Rubric-based feedback so quieter debaters can practice without performing.

Context & Learners

- Setting: Hack@Brown 2025 (Brown University hackathon); prototype web application.

- Learners: Students practicing basic debate and argumentation who hesitate to speak up in live settings.

Problem

In traditional debate practice, more extroverted students dominate. Quieter students get less practice and almost no targeted feedback.

Learning Goals

- Give students a low-pressure space to practice arguments.

- Provide specific feedback on evidence, structure, and rebuttals.

- Encourage revision instead of one-shot performances.

Design & Implementation

- Students respond to common prompts (for example: chores at home, school uniforms) inside the app.

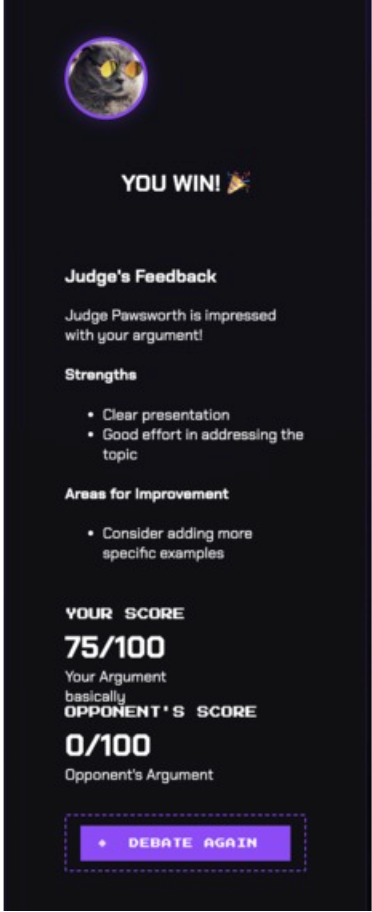

- The app uses an AI model to score along rubric dimensions such as evidence quality, clarity, and rebuttal strength, and returns concrete suggestions.

- The rubric was iterated so comments turned into actions. For example, "Strengthen evidence" became "Add one credible source and connect it to your claim with one sentence of reasoning."
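The step from a vague comment to a concrete action can be sketched as a lookup from rubric dimension to suggestion. This is an illustrative sketch, not the prototype's code: the names `RubricScore`, `ACTIONABLE_SUGGESTIONS`, and `suggestions_for` and the 1–3 scale are assumptions, and only the "evidence" wording comes from the text above. In the app the scores would come from the AI model; here they are passed in by hand.

```python
from dataclasses import dataclass

# Only the "evidence" suggestion is taken from the portfolio text.
# The other two are illustrative placeholders.
ACTIONABLE_SUGGESTIONS = {
    "evidence": "Add one credible source and connect it to your claim "
                "with one sentence of reasoning.",
    "clarity": "State your main claim in the first sentence.",       # placeholder
    "rebuttal": "Name one opposing point and answer it directly.",   # placeholder
}


@dataclass
class RubricScore:
    dimension: str
    level: int  # assumed scale: 1 = needs work, 2 = developing, 3 = strong


def suggestions_for(scores: list[RubricScore], threshold: int = 3) -> list[str]:
    """Return one concrete action for each known dimension scored below `threshold`."""
    return [
        ACTIONABLE_SUGGESTIONS[s.dimension]
        for s in scores
        if s.level < threshold and s.dimension in ACTIONABLE_SUGGESTIONS
    ]


# Hypothetical scores for one response: weak evidence, strong clarity.
example = [RubricScore("evidence", 1), RubricScore("clarity", 3)]
for action in suggestions_for(example):
    print("-", action)
```

Keeping the suggestions in one table makes it easy to revise the wording with a coach without touching the scoring logic.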

Evidence / Evaluation

- Early feedback from peers at the hackathon highlighted that the tool made it easier to see what to fix without feeling judged in front of a crowd.

- With more time, I would test it with 10–15 students: score their responses to a prompt before and after practice using the rubric, and ask brief questions about confidence and usability.

What I Would Change Next Time

- Add an explicit revise-and-resubmit loop directly into the interface.

- Include a simple confidence check after each round.

- Involve a debate coach or teacher to co-design rubrics for specific classes.

Revise-and-Resubmit Feedback Loop for Early CS and Argumentation Learners

Turning feedback into a habit, not a verdict.

Context & Learners

- Early CS students (explaining code or algorithms) and early argumentation learners (short written arguments).

- Target group: students who stop asking questions and quietly disengage when they feel behind.

Problem

Many students receive feedback once, feel judged, and then stop revising. Quiet disengagement is hard for teachers to see and even harder to catch early.

Learning Goals

- Help students treat a first answer as a draft, not a final grade.

- Practice using feedback to make specific changes.

- Track confidence and persistence over a short series of practice rounds.

Design & Flow

- Student receives a prompt (for example, "Explain how this loop works" or "Should students have to do chores?").

- Student submits a short response.

- The system provides rubric-based feedback on dimensions such as claim, evidence, reasoning, and clarity.

- Student revises their response.

- Student writes a brief reflection (1–2 sentences) on what they changed and why.
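The five steps above can be sketched as a single record per practice round. This is a hypothetical data model rather than an existing implementation: `PracticeRound`, `run_round`, and the three callables are names assumed for illustration, and the feedback function stands in for whatever rubric scorer is used.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

Feedback = Dict[str, str]  # rubric dimension -> comment


@dataclass
class PracticeRound:
    prompt: str
    draft: str
    feedback: Feedback = field(default_factory=dict)
    revision: str = ""
    reflection: str = ""

    def is_complete(self) -> bool:
        """A round counts only when feedback led to a revision and a reflection."""
        return (
            bool(self.feedback)
            and bool(self.revision.strip())
            and bool(self.reflection.strip())
        )


def run_round(
    prompt: str,
    draft: str,
    give_feedback: Callable[[str], Feedback],
    revise: Callable[[str, Feedback], str],
    reflect: Callable[[str, str], str],
) -> PracticeRound:
    """Walk one response through feedback, revision, and reflection, in that order."""
    round_ = PracticeRound(prompt=prompt, draft=draft)
    round_.feedback = give_feedback(draft)
    round_.revision = revise(draft, round_.feedback)
    round_.reflection = reflect(draft, round_.revision)
    return round_


# Stand-in callables; in practice the student supplies the revision and reflection.
demo = run_round(
    prompt="Should students have to do chores?",
    draft="Yes, because it is good for them.",
    give_feedback=lambda text: {"evidence": "No source is cited."},
    revise=lambda text, fb: text + " One study I found supports this.",
    reflect=lambda old, new: "I added a source because the evidence was missing.",
)
print(demo.is_complete())
```

Treating the reflection as a required field is one way to make "noticing what changed" part of finishing a round.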

Rubric Snapshot

| Criteria | Needs Work | Developing | Strong |
|---|---|---|---|
| Claim clarity | ○ | ○ | ○ |
| Evidence | ○ | ○ | ○ |
| Reasoning | ○ | ○ | ○ |

Example (Summarized)

- Initial response: A simple argument with limited evidence.

- Feedback: Comments pointing out where evidence was missing and where reasoning was unclear.

- Revised response: The same argument, now citing a specific source and adding one clear sentence that connects the evidence to the claim.

- Reflection: One sentence about what changed and why.

Evaluation Plan

- Small pilot with around 10–15 learners.

- Pre- and post-practice prompts scored with the rubric.

- Confidence questions (1–5) before and after.

- A few short survey items or brief interviews to understand how the feedback felt and what helped.
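For a pilot this small, the pre/post comparison could be summarized with paired gains per learner. The sketch below assumes each learner has one score before and one after on the same scale; `paired_gains` and `summarize_gains` are hypothetical names, and the numbers in the usage lines are made-up placeholders, not pilot results.

```python
from statistics import mean


def paired_gains(pre: list[float], post: list[float]) -> list[float]:
    """Per-learner change (post minus pre); both lists must be in the same learner order."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per learner")
    return [after - before for before, after in zip(pre, post)]


def summarize_gains(pre: list[float], post: list[float]) -> dict:
    """Mean gain, count of learners who improved, and sample size."""
    gains = paired_gains(pre, post)
    if not gains:
        raise ValueError("need at least one learner")
    return {
        "mean_gain": mean(gains),
        "improved": sum(1 for g in gains if g > 0),
        "n": len(gains),
    }


# Placeholder confidence ratings (1-5) for three imaginary learners.
placeholder_pre = [2, 3, 1]
placeholder_post = [3, 3, 3]
print(summarize_gains(placeholder_pre, placeholder_post))
```

With 10–15 learners, these numbers describe the group rather than prove an effect, which is why the plan pairs them with survey items or interviews.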

What I Learned

Designing the flow clarified my thinking about what revision actually requires: not just re-doing, but noticing what changed and articulating why. Next time, I would simplify the rubric to three core dimensions and consider adding a peer feedback option so students can see how others approach the same prompt.

Journaling as Data: Modeling Emotional Trajectories

Five years of entries as a lab for motivation and persistence.

Context & Learners

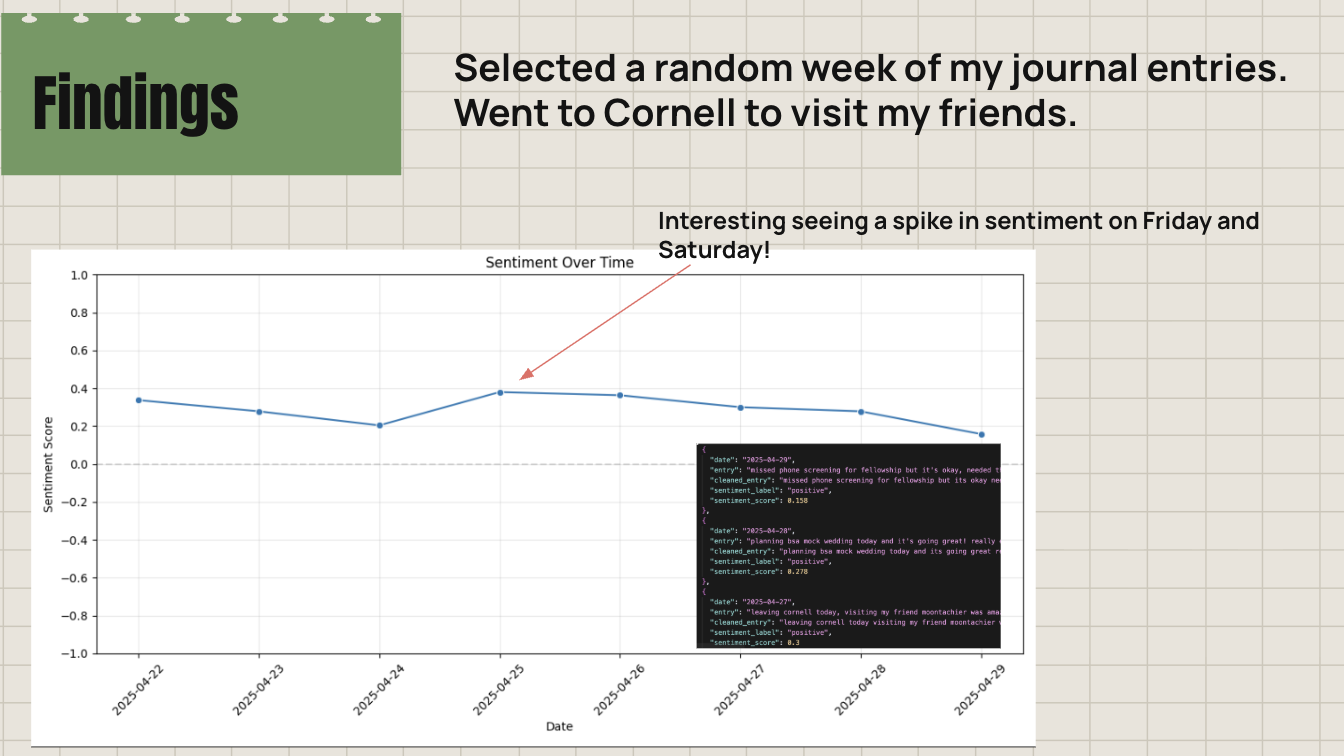

- Personal journaling dataset: more than 2,000 entries over five years.

- Treated as a way to study how motivation, confidence, and mood fluctuate over time.

Problem / Question

How do motivation and confidence actually change over long periods of study, and what patterns might matter for how we support students?

Approach

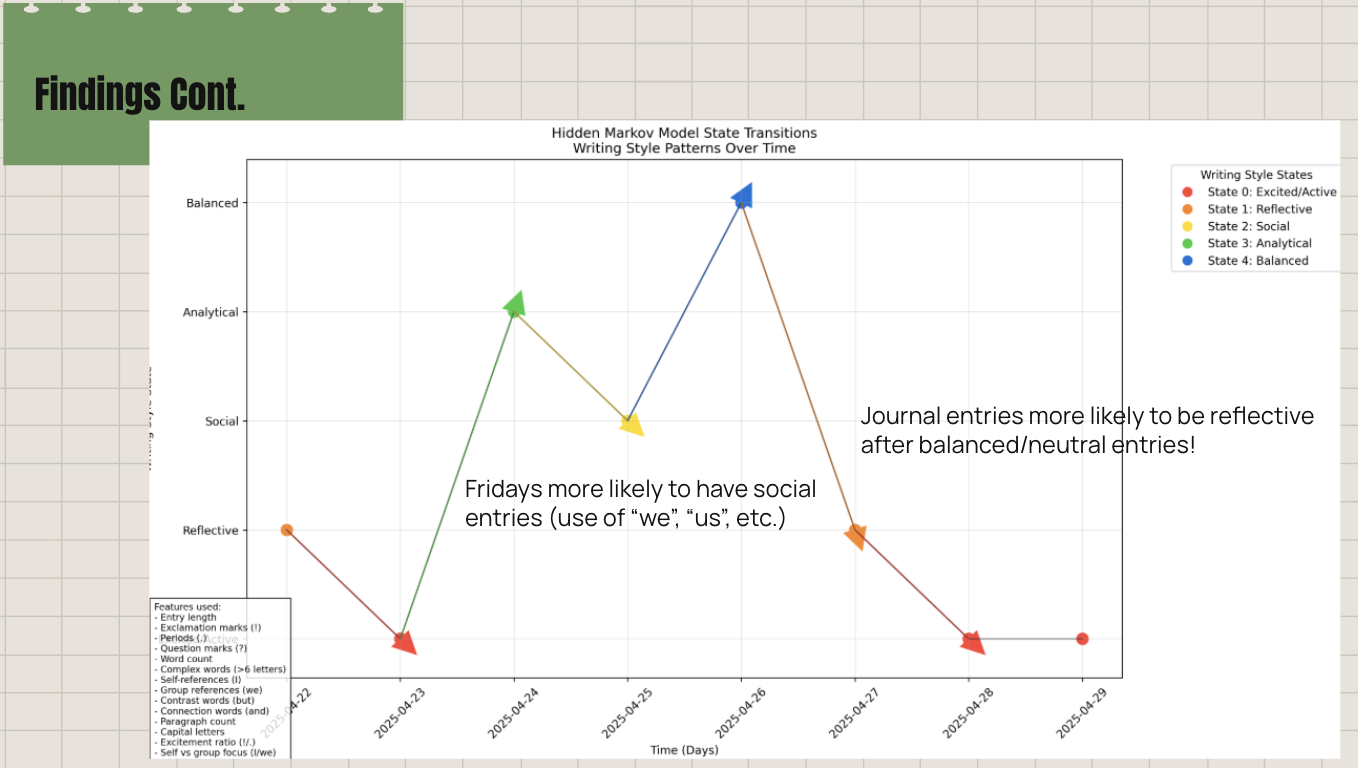

- Applied simple sentiment analysis and sequence modeling (for example, Hidden Markov Models) to track shifts in mood and motivation states.

- Looked for transitions into "avoidant" or "burnt out" states and which events seemed to precede them.
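To make the sequence-modeling idea concrete, here is a small Viterbi decoder for a two-state Hidden Markov Model, written in plain Python. It is a toy sketch, not the model fitted to the journal: the state names, the "pos"/"neg" sentiment labels, and every probability are assumptions chosen by hand for illustration.

```python
import math

# Hypothetical states and hand-set probabilities (not learned from the journal data).
STATES = ["engaged", "avoidant"]
START = {"engaged": 0.6, "avoidant": 0.4}
TRANS = {
    "engaged": {"engaged": 0.8, "avoidant": 0.2},
    "avoidant": {"engaged": 0.3, "avoidant": 0.7},
}
EMIT = {
    "engaged": {"pos": 0.7, "neg": 0.3},
    "avoidant": {"pos": 0.2, "neg": 0.8},
}


def viterbi(observations: list[str]) -> list[str]:
    """Return the most likely hidden-state sequence for a list of 'pos'/'neg' labels."""
    if not observations:
        return []
    # Log-probability of the best path ending in each state after the first entry.
    best = {s: math.log(START[s]) + math.log(EMIT[s][observations[0]]) for s in STATES}
    back = []  # one dict of back-pointers per later time step
    for obs in observations[1:]:
        prev, best, pointers = best, {}, {}
        for s in STATES:
            source = max(STATES, key=lambda q: prev[q] + math.log(TRANS[q][s]))
            pointers[s] = source
            best[s] = prev[source] + math.log(TRANS[source][s]) + math.log(EMIT[s][obs])
        back.append(pointers)
    # Trace back from the most likely final state.
    state = max(STATES, key=lambda s: best[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    return path[::-1]


print(viterbi(["pos", "pos", "neg", "neg", "neg"]))
# prints ['engaged', 'engaged', 'avoidant', 'avoidant', 'avoidant']
```

The decoded path marks where the sequence most plausibly switches state, which is the kind of transition point the analysis looks for before asking what preceded it.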

What It Taught Me

- Motivation is rarely linear; it moves in cycles.

- That insight now shapes how I think about learners: I assume fluctuation and design for recovery, not perfection.

I built these projects as a student trying to keep quieter learners in the conversation, including my past self. Over time, I want to deepen the learning science and evaluation side of this work so I can design and test interventions with more rigor and reach. Until then, this page is my running record of what I am learning about how people stay, or drift, in a learning environment.